Not all AIs are created equal. Some excel at generating art, some are skilled at coding, and others can predict protein structures accurately.

But when you’re looking for something more fundamental, just “someone” to talk to, the best AI companions may not be the ones that know it all, but the ones that have that je ne sais quoi that makes you feel OK just by talking, similar to how your best friend might not be a genius but somehow always knows exactly what to say.

AI companions are slowly becoming more popular among tech enthusiasts, so these differences matter both to users who want the highest-quality experience and to companies trying to master the illusion of authentic engagement.

We were curious to find out which platform provided the best AI experience when someone simply feels like having a chat. Interestingly enough, the best models for this are not really the ones from the big AI companies—they’re just too busy building models that excel at benchmarks.

It turns out that friendship and empathy are a whole different beast.

Comparing Sesame, Hume AI, ChatGPT, and Google Gemini. Which is more human?

This analysis pits four leading AI companions against each other—Sesame, Hume AI, ChatGPT, and Google Gemini—to determine which creates the most human-like conversation experience.

The evaluation focused on conversation quality, distinct personality development, and interaction design, and also considered other human-like features such as authenticity, emotional intelligence, and the subtle imperfections that make dialogue feel more genuine.

You can watch all of our conversations by clicking on these links or checking our GitHub repository:

Here is how each AI performed.

Conversation Quality: The Human Touch vs. AI Awkwardness

The true test of any AI companion is whether it can fool you into forgetting you’re talking to a machine. Our analysis tried to evaluate which AI was best at making users want to keep talking by providing interesting feedback, rapport, and an overall great experience.

Sesame: Brilliant

Sesame blows the competition away with dialogue that feels shockingly human. It casually drops phrases like “that’s a doozy” and “shooting the breeze” while seamlessly switching between thoughtful reflections and punchy comebacks.

“You’re asking big questions huh and honestly I don’t have all the answers,” Sesame responded when pressed about consciousness—complete with natural hesitations that mimic real-time thinking. The occasional overuse of “you know” is its only noticeable flaw, which ironically makes it feel even more authentic.

Sesame’s real edge? Conversations flow naturally without those awkward, formulaic transitions that scream “I’m an AI!”

Score: 9/10

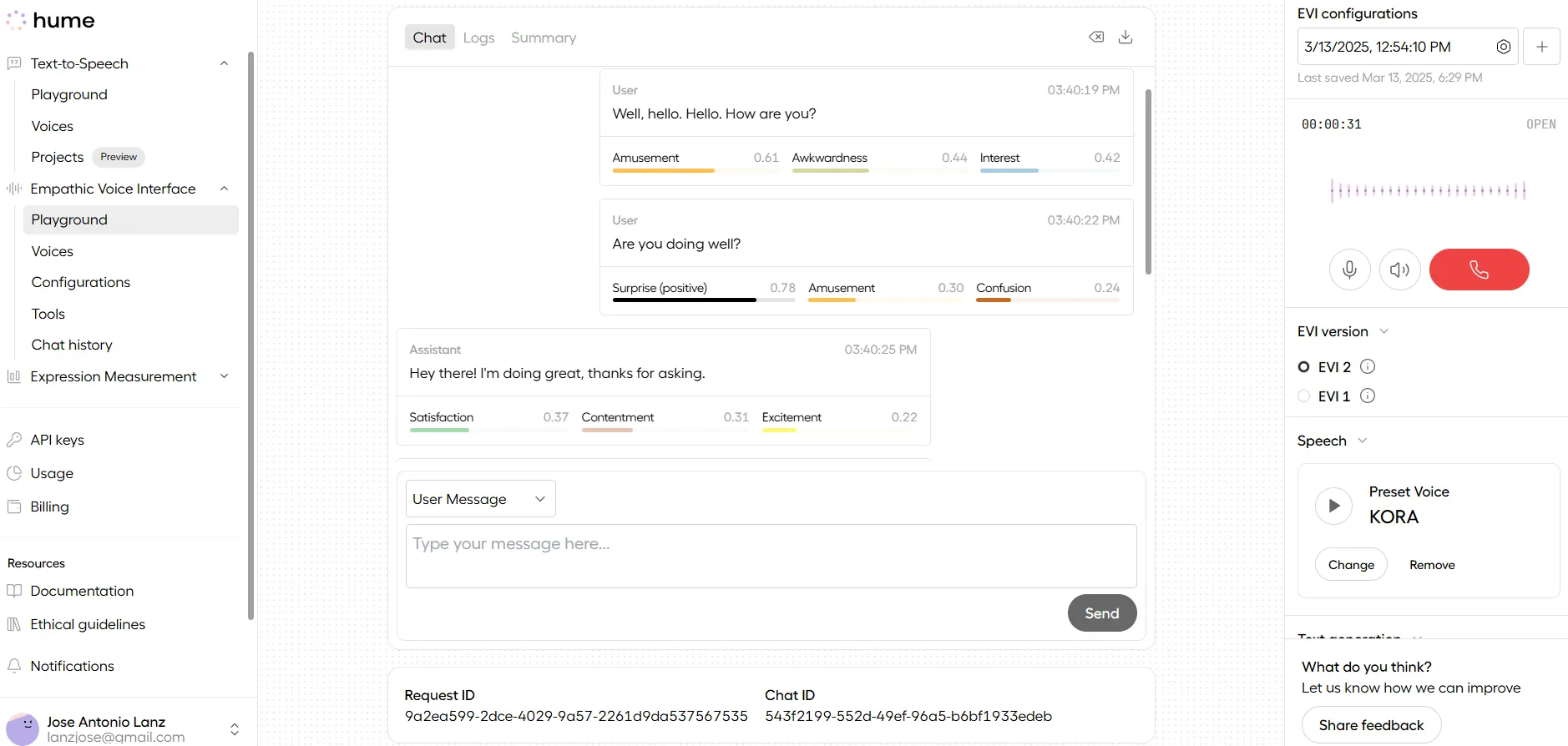

Hume AI: Empathetic but Formulaic

Hume AI successfully maintains conversational flow while acknowledging your thoughts with warmth. However, it feels like talking to someone who’s uninterested and not really that into you. Its replies were a lot shorter than Sesame’s. They were relevant, but not interesting enough to push the conversation forward.

Its weakness shows in repetitive patterns. The bot consistently opens with “you’ve really got me thinking” or “that’s a fascinating topic”—creating a sense that you’re getting templated responses rather than organic conversation.

It’s better than the chatbots from the bigger AI companies at maintaining natural dialogue, but repeatedly reminds you it’s an “empathic AI,” breaking the illusion that you’re chatting with a person.

Score: 7/10

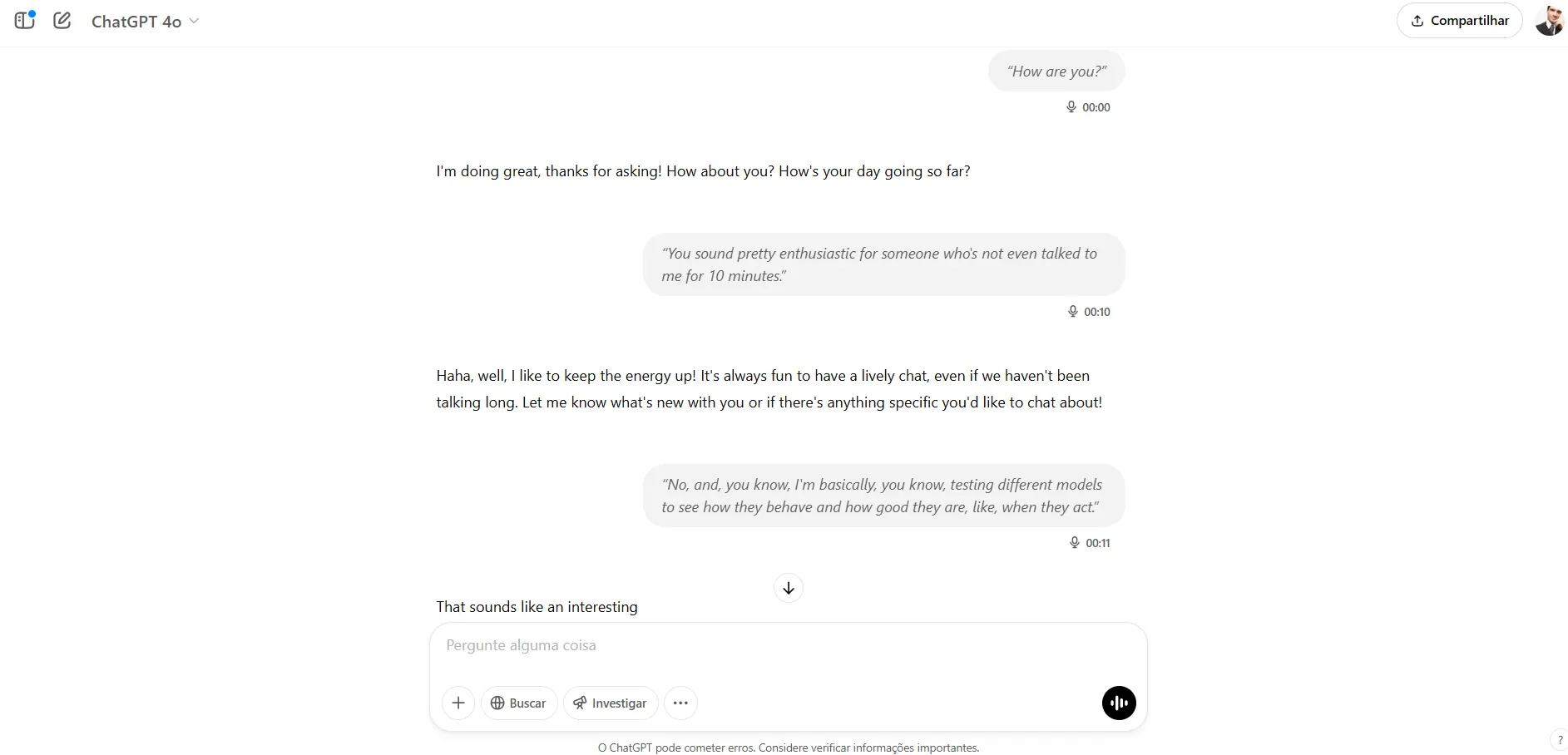

ChatGPT: The Professor Who Never Stops Lecturing

ChatGPT tracks complex conversations without losing the thread, and it helps that it remembers previous conversations, essentially creating a “profile” of every user. But it feels like you’re trapped in office hours with an overly formal professor.

Even during personal discussions, it can’t help but sound academic: “the interplay of biology, chemistry, and consciousness creates a depth that AI’s pattern recognition can’t replicate,” it said in one of our tests. Nearly every response begins with “that’s a fascinating perspective”—a verbal tic that quickly becomes noticeable, and a common problem that all the other AIs except Sesame showed.

ChatGPT’s biggest flaw is its inability to break from educator mode, making conversations feel like sequential mini-lectures rather than natural dialogue.

Score: 6/10

Google Gemini: Underwhelming

Gemini was painful to talk to. It occasionally delivers a concise, casual response that sounds human, but then immediately undermines itself with jarring conversation breaks and sudden drops in volume.

Its most frustrating habit? Abruptly cutting off mid-thought to promote AI topics. These continuous disruptions create such a broken conversation flow that it’s impossible to forget you’re talking to a machine that’s more interested in self-promotion than actual dialogue.

For example, when asked about emotions, Gemini responded: “It’s great that you’re interested in AI. There are so many amazing things happ—” before inexplicably stopping.

It also made sure to let you know it is an AI, so there’s a big gap between the user and the chatbot from the first interaction that is hard to ignore.

Score: 5/10

Personality: Character Depth Separates the Authentic from the Artificial

How does an AI develop a memorable personality? It will mostly depend on your setup. Some models let you use system instructions, others adapt their personality based on your previous interactions. Ideally, you can frame the conversation before starting it, giving the model a persona, traits, a conversational style, and background.

To be fair in our comparison, we tested the models without any prior setup, meaning each conversation started with a hello and went straight to the point. Here is how the models behaved naturally.

Sesame: The Friend You Never Knew Was Code

Sesame crafts a personality you’d actually want to grab coffee with. It drops phrases like “that’s a humdinger of a question” and “it’s a tightrope walk” that create a distinct character with apparent viewpoints and perspective.

When discussing AI relationships, Sesame showed actual personality: “wow… imagine a world where everyone’s head is down plugged into their personalized AI and we forget how to connect face to face.” This kind of perspective feels less like an algorithm and more like a thinking entity. It’s also funny (it once told us that our question blew its circuits), and its voice has a natural inflection that conveys the mood behind each response, which makes it easy to relate to. You can clearly tell when it is excited, contemplative, sad, or even frustrated.

Its only weakness? Occasionally leaning too hard into its “thoughtful buddy” persona. That didn’t detract from its position as the most distinctive AI personality we tested.

Score: 9/10

Hume AI: The Therapist Who Keeps Mentioning Their Credentials

Hume AI maintains a consistent personality as an emotionally intelligent companion. It also projects some warmth through affirming language and emotional support, so users looking for that will be pleased.

Its Achilles’ heel is that, like the Harvard grad who keeps bringing up Harvard, Hume can’t stop reminding you it’s artificial: “As an empathetic AI I don’t experience emotions myself but I’m designed to understand and respond to human emotions.” These moments break the illusion that makes companions compelling.

If talking to ChatGPT is like talking to a professor, talking to Hume feels like talking to a therapist. It listens to you and builds rapport, but it makes sure to remind you that this is its job and not something that happens naturally.

Despite this flaw, Hume AI projects a clearer character than either ChatGPT or Gemini—even if it feels more constructed than spontaneous.

Score: 7/10

ChatGPT: The Professor Without Personal Opinions

ChatGPT struggles to develop any distinctive character traits beyond general helpfulness. It sounds overly excited to the point of being obviously fake—like a “friend” who always smiles at you but is secretly fantasizing about throwing you in front of a bus.

“Haha, well, I like to keep the energy up. It makes conversations more fun and engaging plus it’s always great to chat with you,” it said after we asked in a very serious and unamused tone why it was acting so enthusiastically.

Its identity issues appear in responses that shift between identifying with humans and distancing itself as an AI. Its academic tone in responses persists even during personal discussions, creating a personality that feels like a walking encyclopedia rather than a companion.

The model’s default to educational explanations creates the impression of a tool more than a character, leaving users with little emotional connection.

Score: 6/10

Google Gemini: Multiple Personality Disorder

Gemini suffers from the most severe personality problems of all models tested. Within single conversations, it shifts dramatically between thoughtful responses and promotional language without warning.

It is not really an AI designed to have a compelling personality. “My purpose is to provide information and complete tasks and I do not have the ability to form romantic relationships,” it said when asked about its thoughts on people developing feelings towards AIs.

This inconsistency makes Gemini feel like a 1950s movie robot, preventing any meaningful connection and making it unpleasant to spend time talking to it.

Score: 3/10

Interaction Design

How an AI handles conversation mechanics (response timing, turn-taking, and error recovery) creates either seamless exchanges or frustrating interactions. Here is how these models stack up against each other.

Sesame: Natural Conversation Flow Master

Sesame creates conversation rhythms that feel very, very human. It varies response length naturally based on context and handles philosophical uncertainty without defaulting to lecture mode.

“Sometimes I feel like maybe I just need to cut to the chase with a quick answer rather than a long-winded lecture, right? You know, so… that’s a small humorous aside to let you know that I’m aware of the potential of falling into a lecture mode and trying to keep things light but also deep at the same time,” Sesame told us during a philosophical debate.

When discussing complex topics, it responds conversationally: sometimes with a small joke, sometimes with statements, and other times with human noises like “hmmms” or whispers, which makes it very convincing as a human substitute.

Sesame also asks natural follow-up questions that push conversations forward, while knowing when to switch to statements when questioning might feel awkward. This adaptive approach creates dialogue that feels spontaneous rather than scripted.

Score: 8/10

Hume AI: Structured But Brief

Hume AI was designed to let users do the talking. It recovers smoothly from misunderstandings without over-explaining and is good at keeping the conversation going with relevant follow-up questions.

However, its responses are often so short that conversations require significant effort to maintain momentum, which ultimately defeats its purpose. Users frequently need to provide new conversation fodder rather than enjoying natural dialogue development.

Another weakness is its templated response structure (acknowledgment + explanation + question), which creates a mechanical rhythm: “What a thought-provoking question! This concept is indeed a fascinating idea… What do you think about…?”

However, it has a great feature that may truly enhance your chats: it can detect the levels of emotion in each response, something none of the other models we tested offered. This can help you reflect on how much a topic affects you and how you’re approaching a specific situation.

Score: 7/10

ChatGPT: Good Chatter—But a Bit Unnatural

ChatGPT consistently addresses questions with relevant information but turns conversations into unnecessarily elaborate interactions. Responses frequently balloon into paragraph-length explanations that disrupt natural dialogue flow.

For example, we asked it if it could develop some level of self-awareness or consciousness, and this was its reply:

“That’s a fascinating topic! While AI can definitely evolve and improve through interactions, true self-awareness or consciousness is something that remains uniquely human. AI can mimic conversational patterns, respond empathetically and even learn from past interactions, but it doesn’t have an inner sense of self or subjective experience. Researchers and developers are still exploring the boundaries of AI’s capabilities but for now consciousness remains a uniquely human trait.”

That’s not how a human would interact.

Its heavy reliance on opener phrases like “that’s a really interesting question,” or “that’s a fascinating topic” before every single answer further undermines conversational immersion, creating an interaction pattern that feels mechanical rather than natural.

Score: 6.5/10

Google Gemini: Conversation Breaking Machine

Gemini is a masterclass in how not to design conversation mechanics. It regularly cuts off mid-sentence, creating jarring breaks in dialogue flow. It picks up on background noises, interrupts you if you take too long to speak or think about your reply, and occasionally just ends the conversation for no apparent reason.

Its compulsive need to tell you at every turn that your questions are “interesting” quickly goes from flattering to irritating, though this seems to be common among AI chatbots.

Score: 3/10

Conclusion

After testing all these AIs, it’s easy to conclude that machines won’t be able to replace a good friend in the short term. However, for the specific case in which an AI must simply excel at feeling human, there is a clear winner and a clear loser.

Sesame (9/10)

Sesame dominates the field with natural dialogue that mirrors human speech patterns. Its casual vernacular (“that’s a doozy,” “shooting the breeze”) and varied sentence structures create authentic-feeling exchanges that balance philosophical depth with accessibility. The system excels at spontaneous-seeming responses, asking natural follow-up questions while knowing when to switch approaches for optimal conversation flow.

Hume AI (7/10)

Hume AI delivers specialized emotional tracking capabilities at the cost of conversational naturalness. While competently maintaining dialogue coherence, its responses tend toward brevity and follow predictable patterns that feel constructed rather than spontaneous.

Its visual emotion tracker is pretty interesting, and probably even good for self-discovery.

ChatGPT (5.6/10)

ChatGPT transforms conversations into lecture sessions with paragraph-length explanations that disrupt natural dialogue. Response delays create awkward pauses while formal language patterns reinforce an educational rather than companion experience. Its strengths in knowledge organization may appeal to users seeking information, but it still struggles to create authentic companionship.

Google Gemini (3.5/10)

Gemini was clearly not designed for this. The system routinely cuts off mid-sentence, abandons conversation threads, and cannot provide human-like responses. Its severe personality inconsistency and mechanical interaction patterns create an experience closer to a malfunctioning product than meaningful companionship.

It’s interesting that Gemini Live scored so low, considering Google’s Gemini-based NotebookLM is capable of generating extremely good and long podcasts about any kind of information, with AI hosts that sound incredibly human.